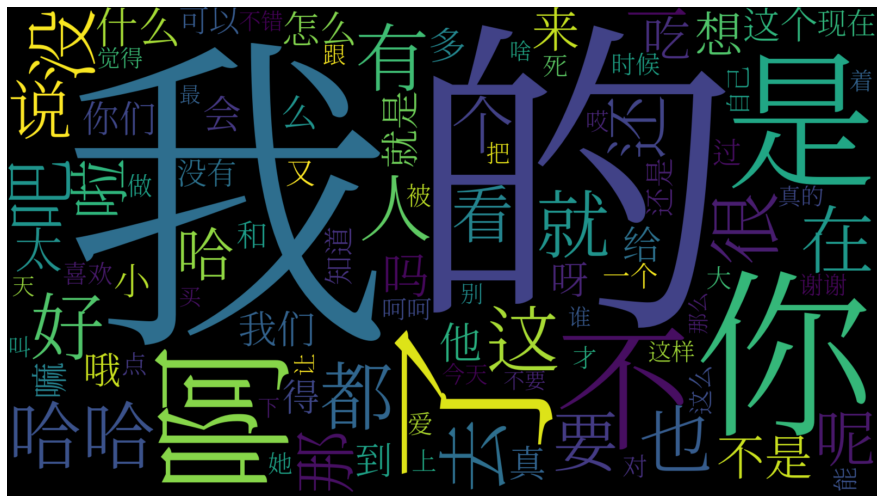

The Most Commonly Used Chinese Words

I think the most effective way to learn a language is to start with the words people use most often day to day. Studying words in order of frequency gives you the largest gain in comprehension from each new word you learn.

To that end, I took a dataset of Weibo (China’s Twitter) posts and ranked every word that appears in it in order of frequency.

You can download a CSV of the 2000 most common words here.

The CSV contains a “back” column so you can also load it into Anki and use it as flashcards. The dataset is thanks to Dr. Minlie Huang at Tsinghua University. You can find the dataset here.
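If you would rather import a plain two-column file than the full CSV, here is a minimal sketch of one way to do it. It assumes the CSV has `word` and `back` columns (as produced by the code further down) and writes tab-separated front/back lines, a text format Anki can import. The function name `csv_to_anki_tsv` and the sample rows are hypothetical, for illustration only.

```python
import csv
import io

def csv_to_anki_tsv(csv_text: str) -> str:
    """Convert CSV text with 'word' and 'back' columns into tab-separated lines."""
    reader = csv.DictReader(io.StringIO(csv_text))
    out = io.StringIO()
    writer = csv.writer(out, delimiter="\t", lineterminator="\n")
    for row in reader:
        # Front of the card is the word, back is the pinyin + definition HTML.
        writer.writerow([row["word"], row["back"]])
    return out.getvalue()

# Hypothetical two-row sample in the same shape as the real CSV.
sample = 'word,back\n你,<b>nǐ</b><br/>you\n我,"<b>wǒ</b><br/>I<br/>me"\n'
anki_tsv = csv_to_anki_tsv(sample)
print(anki_tsv)
```

Because the `back` column contains HTML tags such as `<b>` and `<br/>`, you will probably want to enable HTML in fields when importing so the tags render as formatting.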

Let me know if this is useful!

Code to Generate Top Word List

If you’re interested in how this was generated and want to remix the code, it’s here on Colab, as well as written out below.

import pandas as pd

!pip install chinese

!pip install pinyin

Collecting chinese

Downloading https://files.pythonhosted.org/packages/15/fe/35c1cd7792f0c899fbeae66d35491721cae6be6d8a128d4f77e6e3479b3a/chinese-0.2.1-py3-none-any.whl (12.6MB)

Collecting pynlpir

Downloading https://files.pythonhosted.org/packages/7c/66/79d353119143f92fdf80aea0e8b5b8289baf60708a3202fc7a4d3a530d0e/PyNLPIR-0.6.0-py2.py3-none-any.whl (13.1MB)

Requirement already satisfied: jieba in /usr/local/lib/python3.6/dist-packages (from chinese) (0.42.1)

Requirement already satisfied: click in /usr/local/lib/python3.6/dist-packages (from pynlpir->chinese) (7.0)

Installing collected packages: pynlpir, chinese

Successfully installed chinese-0.2.1 pynlpir-0.6.0

Requirement already satisfied: pinyin in /usr/local/lib/python3.6/dist-packages (0.4.0)

from chinese import ChineseAnalyzer

analyzer = ChineseAnalyzer()

result = analyzer.parse('就')

print(result.tokens())

print(result.pinyin())

import pinyin

import pinyin.cedict

['就']

jiù

!curl -O http://coai.cs.tsinghua.edu.cn/media/files/ecm_train_data.zip

!unzip ecm_train_data.zip

!du -csh *

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 44.7M 100 44.7M 0 0 294k 0 0:02:35 0:02:35 --:--:-- 218k

Archive: ecm_train_data.zip

inflating: train_data.json

45M ecm_train_data.zip

55M sample_data

126M train_data.json

225M total

import json

with open('train_data.json') as f:

    weibo_posts = json.load(f)

print('Num posts:', len(weibo_posts))

Num posts: 1119207

# Preview Weibo posts.

for post in weibo_posts[:10]:

    print(post)

[['希望 九 哥 日日 开心 同 我 打 羽毛 波\n', 1], ['加 埋 哦 ! 句 需要 运动 !\n', 0]]

[['哈哈 、 生日 快乐 。 我 地 居然 同一 日 生日\n', 5], ['哈哈 … 生日 快乐 。\n', 5]]

[['有人 问 , 何时 去 北京 演出 呢 ? 正好 你 回答 一下 。\n', 0], ['北京 ? 争取 2011 年底 。 回答 完毕\n', 0]]

[['这 美 ?\n', 1], ['哈哈 ~ ~\n', 5]]

[['一定 要 支持 ~\n', 1], ['谢谢 支持 祝 你 好运 哦\n', 1]]

[['肿 么 呢 ? 青奈 滴 ? 吃 点 退烧 药\n', 3], ['这 都 不是 重点 , 马 春然 完美 演出 才 是 正经\n', 1]]

[['粗粗 吧 , 我 觉得 还 好 啊 。 你 回来 没 啊 上报 哥 !\n', 2], ['怎么 叫 上报 哥 啊 看 我 八 月 的 排班 怎样 有 时间 就 回去\n', 4]]

[['我 听 在 块\n', 0], ['今晚 你们 全都 要 来 老 公司 么 ?\n', 4]]

[['我 想 我 会 一直 孤单 , 过 着 孤单 的 生活\n', 2], ['原来 都 是 或 曾经 孤单 , 或 正在 孤单 的 主儿 。\n', 2]]

[['混 得 好 我 就 不 回来 了 , 你们 在 天朝 要 坚强 。 到 时候 一起 接 你们 过去 。\n', 0], ['要 奸 墙 , 不要 被 强奸 。\n', 4]]

import re

weibo_word_frequency = {}

for question, response in weibo_posts:

    # Each post is a [text, label] pair; only the text is needed here.

    words = question[0].split(' ') + response[0].split(' ')

    for word in words:

        word = word.strip()

        # Keep words that start with a Chinese character. re.match only anchors

        # at the start; re.fullmatch would require every character to be Chinese.

        if re.match(r'[\u4e00-\u9fff]+', word):

            if word not in weibo_word_frequency:

                weibo_word_frequency[word] = 0

            weibo_word_frequency[word] += 1

weibo_word_frequency['北京']

8044
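For anyone remixing the counting step, here is a minimal alternative sketch using `collections.Counter`. It is not the code that produced the CSV: it swaps `re.match` for `re.fullmatch`, so a token only counts if every character is in the `\u4e00-\u9fff` range, which means totals could differ slightly on mixed tokens. The helper name `count_words` and the toy post are made up for illustration.

```python
import re
from collections import Counter

# Matches tokens made up entirely of characters in the \u4e00-\u9fff block.
CJK_WORD = re.compile(r'[\u4e00-\u9fff]+')

def count_words(posts):
    """Count Chinese-only tokens across (question, response) post pairs."""
    counts = Counter()
    for question, response in posts:
        # Each side is a [text, label] pair; the label is ignored here.
        for text, _label in (question, response):
            for word in text.split():
                if CJK_WORD.fullmatch(word):
                    counts[word] += 1
    return counts

# One toy post in the same shape as train_data.json.
toy_posts = [
    [['北京 ? 争取 2011 年底 。\n', 0], ['哈哈 ~ 北京 见\n', 5]],
]
counts = count_words(toy_posts)
print(counts['北京'])
```

`Counter` removes the need for the "initialize to 0 if missing" check, and `counts.most_common(n)` returns the top `n` words directly.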

df = pd.DataFrame(data=weibo_word_frequency.items(),

columns=['word', 'occurrences'])

df.head(10)

| | word | occurrences |
|---|---|---|
| 0 | 希望 | 12223 |
| 1 | 九 | 1740 |
| 2 | 哥 | 19073 |
| 3 | 日日 | 414 |
| 4 | 开心 | 14743 |
| 5 | 同 | 5926 |
| 6 | 我 | 644065 |
| 7 | 打 | 21903 |
| 8 | 羽毛 | 310 |
| 9 | 波 | 1156 |

# Add frequency percentiles (percentile rank of each word's occurrence count)

df['percentile'] = df['occurrences'].rank(pct=True)

# What are the most frequently used words?

df.sort_values('percentile', ascending=False, inplace=True)

df.head(10)

| | word | occurrences | percentile |
|---|---|---|---|
| 76 | 的 | 772887 | 1.000000 |
| 6 | 我 | 644065 | 0.999989 |
| 31 | 你 | 544535 | 0.999979 |
| 107 | 了 | 532542 | 0.999968 |
| 60 | 是 | 378292 | 0.999958 |
| 67 | 啊 | 320625 | 0.999947 |
| 106 | 不 | 249907 | 0.999937 |
| 16 | 哈哈 | 219169 | 0.999926 |
| 66 | 好 | 173661 | 0.999916 |
| 79 | 有 | 152894 | 0.999905 |

# What are the least frequent words?

df.tail(5)

| | word | occurrences | percentile |
|---|---|---|---|
| 68709 | 承龙 | 1 | 0.160445 |
| 18484 | 所街 | 1 | 0.160445 |
| 68707 | 滇东 | 1 | 0.160445 |
| 68703 | 容亮 | 1 | 0.160445 |
| 94863 | 汉献帝 | 1 | 0.160445 |
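Every word that appears only once gets the same percentile (0.160445) because tied counts share a rank. Here is a rough pure-Python sketch of that calculation, assuming pandas' default tie handling for `rank` (`method='average'`, with `pct=True` dividing by the number of rows). The helper `percentile_ranks` and the toy counts are hypothetical.

```python
def percentile_ranks(counts):
    """Average-rank percentiles: tied counts share the mean of their ranks."""
    ordered = sorted(counts.items(), key=lambda kv: kv[1])
    n = len(ordered)
    ranks = {}
    i = 0
    while i < n:
        # Find the end of the run of words tied with ordered[i].
        j = i
        while j < n and ordered[j][1] == ordered[i][1]:
            j += 1
        # 0-based positions i..j-1 hold 1-based ranks i+1..j; take their mean.
        avg_rank = (i + 1 + j) / 2
        for word, _count in ordered[i:j]:
            ranks[word] = avg_rank / n
        i = j
    return ranks

# Toy counts: three words tied at one occurrence, like the tail above.
toy = {'的': 9, '我': 7, '承龙': 1, '所街': 1, '滇东': 1}
pct = percentile_ranks(toy)
print(pct['承龙'], pct['我'], pct['的'])  # 0.4 0.8 1.0
```

The three tied words occupy ranks 1 to 3, so each gets the average rank 2, and 2 / 5 = 0.4. In the real data the same thing happens on a much larger scale to all the words that occur exactly once.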

# Limit list to 2000 words (copy so new columns can be added without a SettingWithCopyWarning)

df = df[:2000].copy()

print(df.describe())

df.head()

| | occurrences | percentile |
|---|---|---|
| count | 2000.000000 | 2000.000000 |
| mean | 7750.468500 | 0.989464 |
| std | 33741.542328 | 0.006088 |
| min | 880.000000 | 0.978922 |
| 25% | 1297.000000 | 0.984193 |
| 50% | 2100.000000 | 0.989464 |
| 75% | 4473.250000 | 0.994732 |
| max | 772887.000000 | 1.000000 |

| | word | occurrences | percentile |
|---|---|---|---|
| 76 | 的 | 772887 | 1.000000 |
| 6 | 我 | 644065 | 0.999989 |
| 31 | 你 | 544535 | 0.999979 |
| 107 | 了 | 532542 | 0.999968 |
| 60 | 是 | 378292 | 0.999958 |
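One way to check how far a top-N list gets you is to measure what share of all word occurrences those N words account for. The sketch below does this on made-up counts. The function name `coverage_of_top_n` and the numbers are illustrative assumptions, not figures from the Weibo dataset.

```python
def coverage_of_top_n(counts, n):
    """Fraction of all word occurrences accounted for by the n most frequent words."""
    ordered = sorted(counts.values(), reverse=True)
    total = sum(ordered)
    return sum(ordered[:n]) / total if total else 0.0

# Toy counts chosen to sum to 100 so the arithmetic is easy to follow.
toy_counts = {'的': 50, '我': 30, '你': 10, '北京': 6, '羽毛': 4}
print(coverage_of_top_n(toy_counts, 2))  # 0.8
```

On the real data, you would run this on `weibo_word_frequency` before truncating to 2000 rows, since the total has to include the words that get cut.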

def word_to_pinyin(word):

    if not word:

        return ''

    pin = pinyin.get(word)

    return pin

word_to_pinyin('你')

'nǐ'

def word_to_definition(word):

    if not word:

        return ''

    definition = pinyin.cedict.translate_word(word)

    if not definition:

        return ''

    return '<br/>'.join(list(definition))

word_to_definition('是')

'variant of 是[shi4]<br/>(used in given names)'

df['pinyin'] = df['word'].apply(word_to_pinyin)

df['definition'] = df['word'].apply(word_to_definition)

df['back'] = df['word'].apply(lambda word: '<b>'+word_to_pinyin(word)+'</b><br/>'+word_to_definition(word))

df[['word', 'pinyin', 'percentile', 'definition']].head(50)

| | word | pinyin | percentile | definition |
|---|---|---|---|---|
| 76 | 的 | de | 1.000000 | aim<br/>clear |
| 6 | 我 | wǒ | 0.999989 | I<br/>me<br/>my |
| 31 | 你 | nǐ | 0.999979 | you (informal, as opposed to courteous 您[nin2]) |
| 107 | 了 | le | 0.999968 | unofficial variant of 瞭[liao4] |
| 60 | 是 | shì | 0.999958 | variant of 是[shi4]<br/>(used in given names) |
| 67 | 啊 | a | 0.999947 | modal particle ending sentence, showing affirm... |
| 106 | 不 | bù | 0.999937 | (negative prefix)<br/>not<br/>no |
| 16 | 哈哈 | hāhā | 0.999926 | (onom.) laughing out loud |
| 66 | 好 | hǎo | 0.999916 | to be fond of<br/>to have a tendency to<br/>to... |
| 79 | 有 | yǒu | 0.999905 | to have<br/>there is<br/>there are<br/>to exis... |
| 53 | 都 | dū | 0.999895 | capital city<br/>metropolis |
| 81 | 就 | jìu | 0.999884 | at once<br/>right away<br/>only<br/>just (emph... |
| 148 | 也 | yě | 0.999874 | also<br/>too<br/>(in Classical Chinese) final ... |
| 84 | 在 | zài | 0.999863 | (located) at<br/>(to be) in<br/>to exist<br/>i... |
| 37 | 这 | zhè | 0.999852 | this<br/>these<br/>(commonly pr. [zhei4] befor... |
| 69 | 没 | méi | 0.999842 | drowned<br/>to end<br/>to die<br/>to inundate |
| 26 | 去 | qù | 0.999831 | to go<br/>to go to (a place)<br/>(of a time et... |
| 289 | 很 | hěn | 0.999821 | (adverb of degree)<br/>quite<br/>very<br/>awfully |
| 133 | 人 | rén | 0.999810 | man<br/>person<br/>people<br/>CL:個\|个[ge4],位[wei4] |
| 63 | 吧 | ba | 0.999800 | (modal particle indicating suggestion or surmi... |
| 40 | 要 | yào | 0.999789 | important<br/>vital<br/>to want<br/>to ask for... |
| 65 | 还 | huán | 0.999779 | to pay back<br/>to return |
| 232 | 说 | shuō | 0.999768 | to speak<br/>to say<br/>to explain<br/>to scol... |
| 195 | 一 | yī | 0.999758 | one<br/>1<br/>single<br/>a (article)<br/>as so... |
| 204 | 那 | nà | 0.999747 | (archaic) many<br/>beautiful<br/>how<br/>old v... |
| 189 | 个 | gè | 0.999736 | variant of 個\|个[ge4] |
| 73 | 看 | kàn | 0.999726 | to see<br/>to look at<br/>to read<br/>to watch... |
| 29 | 呢 | ní | 0.999715 | woolen material |
| 142 | 啦 | la | 0.999705 | sentence-final particle, contraction of 了啊, in... |
| 129 | 哈 | hā | 0.999694 | a Pekinese<br/>a pug<br/>(dialect) to scold |
| 54 | 不是 | bùshì | 0.999684 | no<br/>is not<br/>not |
| 49 | 吃 | chī | 0.999673 | variant of 吃[chi1] |
| 92 | 想 | xiǎng | 0.999663 | to think<br/>to believe<br/>to suppose<br/>to ... |
| 372 | 吗 | ma | 0.999652 | (question particle for "yes-no" questions) |
| 155 | 太 | tài | 0.999642 | highest<br/>greatest<br/>too (much)<br/>very<b... |
| 89 | 来 | lái | 0.999631 | to come<br/>to arrive<br/>to come round<br/>ev... |
| 589 | 什么 | shíyāo | 0.999621 | what?<br/>who?<br/>something<br/>anything |
| 120 | 他 | tā | 0.999610 | he or him<br/>(used for either sex when the se... |
| 328 | 我们 | wǒmen | 0.999599 | we<br/>us<br/>ourselves<br/>our |
| 93 | 会 | hùi | 0.999589 | to balance an account<br/>accountancy<br/>acco... |
| 205 | 就是 | jìushì | 0.999578 | (emphasizes that sth is precisely or exactly a... |
| 316 | 呀 | yā | 0.999568 | (particle equivalent to 啊 after a vowel, expre... |
| 687 | 给 | gěi | 0.999557 | to supply<br/>to provide |
| 71 | 怎么 | zěnyāo | 0.999547 | variant of 怎麼\|怎么[zen3 me5] |
| 105 | 得 | dé | 0.999536 | to have to<br/>must<br/>ought to<br/>to need to |
| 12 | 哦 | é | 0.999526 | sentence-final particle that conveys informali... |
| 383 | 这个 | zhègè | 0.999515 | this<br/>this one |
| 110 | 到 | dào | 0.999505 | to (a place)<br/>until (a time)<br/>up to<br/>... |
| 46 | 么 | yāo | 0.999494 | variant of 麼\|么[me5] |
| 261 | 多 | duō | 0.999483 | many<br/>much<br/>often<br/>a lot of<br/>numer... |

df.to_csv('most_common_5k_chinese_words_v6.csv')

!du -sh /content/most_common_5k_chinese_words_v6.csv

372K /content/most_common_5k_chinese_words_v6.csv